Distill — Latest articles about machine learning. A collection of articles and comments with the goal of understanding how to design robust and general purpose self-organizing systems.

What is Knowledge distillation? | IBM

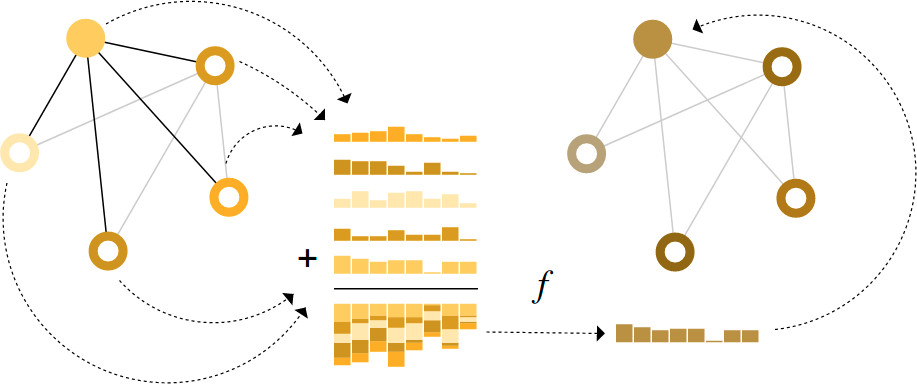

![Knowledge distillation in deep learning and its applications [PeerJ]](https://dfzljdn9uc3pi.cloudfront.net/2021/cs-474/1/fig-2-full.png)

Knowledge distillation in deep learning and its applications [PeerJ]

What is Knowledge distillation? | IBM. Knowledge distillation is a machine learning technique that aims to transfer the learnings of a large pre-trained model, the “teacher model,” to a smaller “student model.”
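
The IBM excerpt does not state the training objective. A minimal sketch of one widely used choice, the soft-target loss described by Hinton et al. in "Distilling the Knowledge in a Neural Network", is shown below: both models' logits are softened with a temperature T, and the student is penalized by the KL divergence KL(teacher || student), scaled by T². The function names, the logits, and T = 2.0 are illustrative assumptions, not IBM's implementation.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def soft_target_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between the softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return temperature ** 2 * kl_divergence(p_teacher, p_student)

# Hypothetical logits for a single 3-class input.
teacher = [4.0, 1.0, 0.5]
student_close = [3.8, 1.1, 0.4]
student_far = [0.5, 1.0, 4.0]
print(soft_target_loss(teacher, student_close))
print(soft_target_loss(teacher, student_far))
```

Raising the temperature flattens the teacher's distribution, so the student is also pushed to match the relative probabilities the teacher assigns to the wrong classes, not only its top prediction.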

Knowledge Distillation Tutorial — PyTorch Tutorials 2.5.0+cu124

*Research Guide: Model Distillation Techniques for Deep Learning*

Knowledge Distillation Tutorial — PyTorch Tutorials 2.5.0+cu124. Knowledge distillation is a technique that enables knowledge transfer from large, computationally expensive models to smaller ones without …
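
A common way to carry out this transfer is to combine a soft-target term, which pulls the student toward the teacher's softened outputs, with ordinary cross-entropy on the true labels. The sketch below is plain Python rather than PyTorch so that it stays self-contained; the weights (0.25 soft, 0.75 hard), the temperature, and the logits are hypothetical values chosen for illustration, not values taken from the PyTorch tutorial.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax with max-subtraction for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def combined_distillation_loss(student_logits, teacher_logits, label,
                               temperature=2.0, soft_weight=0.25, hard_weight=0.75):
    """Weighted sum of a soft-target term (match the teacher) and a hard-label term."""
    # Soft term: cross-entropy between the softened teacher and student distributions,
    # scaled by T^2 so its gradient magnitude stays comparable as T changes.
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    soft = -sum(t * math.log(s) for t, s in zip(p_teacher, p_student_soft)) * temperature ** 2
    # Hard term: ordinary cross-entropy against the ground-truth class index.
    hard = -math.log(softmax(student_logits)[label])
    return soft_weight * soft + hard_weight * hard

teacher_logits = [5.0, 2.0, -1.0]  # hypothetical output of the frozen teacher for one input
student_logits = [1.0, 0.5, 0.2]   # hypothetical output of the student for the same input
print(combined_distillation_loss(student_logits, teacher_logits, label=0))
```

In a full training loop only the student's parameters would be updated; the teacher's logits act as fixed targets. The soft cross-entropy differs from the KL divergence only by the teacher's entropy, which does not depend on the student.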

Knowledge Distillation: Principles, Algorithms, Applications

Knowledge Distillation: Principles, Algorithms, Applications. A second adversarial-learning-based distillation method uses a discriminator model to differentiate the samples produced by the student from those produced by the teacher.
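
The excerpt does not describe the discriminator's inputs or architecture. The following is a minimal sketch under GAN-style assumptions: samples are hypothetical 2-D feature vectors produced by each model, `disc_score` is a hypothetical linear discriminator, the discriminator is trained with binary cross-entropy to label teacher samples 1 and student samples 0, and the student is trained to make its own samples score as teacher-like.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def bce(prob, label, eps=1e-12):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    return -(label * math.log(prob + eps) + (1 - label) * math.log(1 - prob + eps))

def disc_score(weights, bias, sample):
    """Hypothetical linear discriminator: a logit for 'this sample came from the teacher'."""
    return sum(w * x for w, x in zip(weights, sample)) + bias

def discriminator_loss(weights, bias, teacher_samples, student_samples):
    # Discriminator targets: 1 for teacher samples, 0 for student samples.
    losses = [bce(sigmoid(disc_score(weights, bias, s)), 1.0) for s in teacher_samples]
    losses += [bce(sigmoid(disc_score(weights, bias, s)), 0.0) for s in student_samples]
    return sum(losses) / len(losses)

def student_adversarial_loss(weights, bias, student_samples):
    # Student target: make the discriminator predict "teacher" (label 1) for its samples.
    losses = [bce(sigmoid(disc_score(weights, bias, s)), 1.0) for s in student_samples]
    return sum(losses) / len(losses)

teacher_samples = [[2.0, 0.1], [1.8, 0.3]]
student_samples = [[0.2, 1.5], [0.4, 1.7]]
good_disc = ([1.0, -1.0], 0.0)  # scores teacher-like samples high
bad_disc = ([-1.0, 1.0], 0.0)   # scores teacher-like samples low
print(discriminator_loss(*good_disc, teacher_samples, student_samples))
print(discriminator_loss(*bad_disc, teacher_samples, student_samples))
```

During training the two losses would be minimized in alternation: the discriminator's parameters on `discriminator_loss`, the student's on `student_adversarial_loss`. If the student's samples match the teacher's exactly, the two sets cannot be separated, and an uninformative discriminator scores a loss of log 2 ≈ 0.693.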

A pragmatic introduction to model distillation for AI developers

![Knowledge distillation in deep learning and its applications [PeerJ]](https://dfzljdn9uc3pi.cloudfront.net/2021/cs-474/1/fig-2-2x.jpg)

Knowledge distillation in deep learning and its applications [PeerJ]

A pragmatic introduction to model distillation for AI developers. The distillation process involves training the smaller neural network (the student) to mimic the behavior of the larger, more complex teacher.
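
As a minimal sketch of training a student to mimic a teacher, the code below fits a small model to a larger model's outputs on unlabeled inputs. Everything here is a hypothetical stand-in: `teacher` is a fixed nonlinear function playing the role of the pre-trained network, the student is a two-parameter linear model, and it matches the teacher's raw outputs with a mean-squared-error loss rather than softened class probabilities.

```python
import math

def teacher(x):
    """Hypothetical stand-in for a large pre-trained model: a fixed, more complex function."""
    return 2.0 * x + 1.0 + 0.3 * math.sin(3.0 * x)

def train_student(inputs, steps=2000, lr=0.05):
    """Fit a linear student y = a*x + b to the teacher's outputs by gradient descent on MSE."""
    targets = [teacher(x) for x in inputs]  # the teacher is only queried, never updated
    a, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(steps):
        errors = [(a * x + b) - t for x, t in zip(inputs, targets)]
        grad_a = 2.0 / n * sum(e * x for e, x in zip(errors, inputs))
        grad_b = 2.0 / n * sum(errors)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b

def mse(a, b, inputs):
    """Mean squared gap between student and teacher outputs."""
    return sum((a * x + b - teacher(x)) ** 2 for x in inputs) / len(inputs)

inputs = [i / 10.0 for i in range(-20, 21)]  # hypothetical unlabeled "transfer set"
a, b = train_student(inputs)
print(a, b, mse(a, b, inputs))
```

The student cannot represent the teacher's sine term, so it settles on the best linear approximation; this mirrors the capacity gap that makes real students imitate, rather than reproduce, their teachers.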

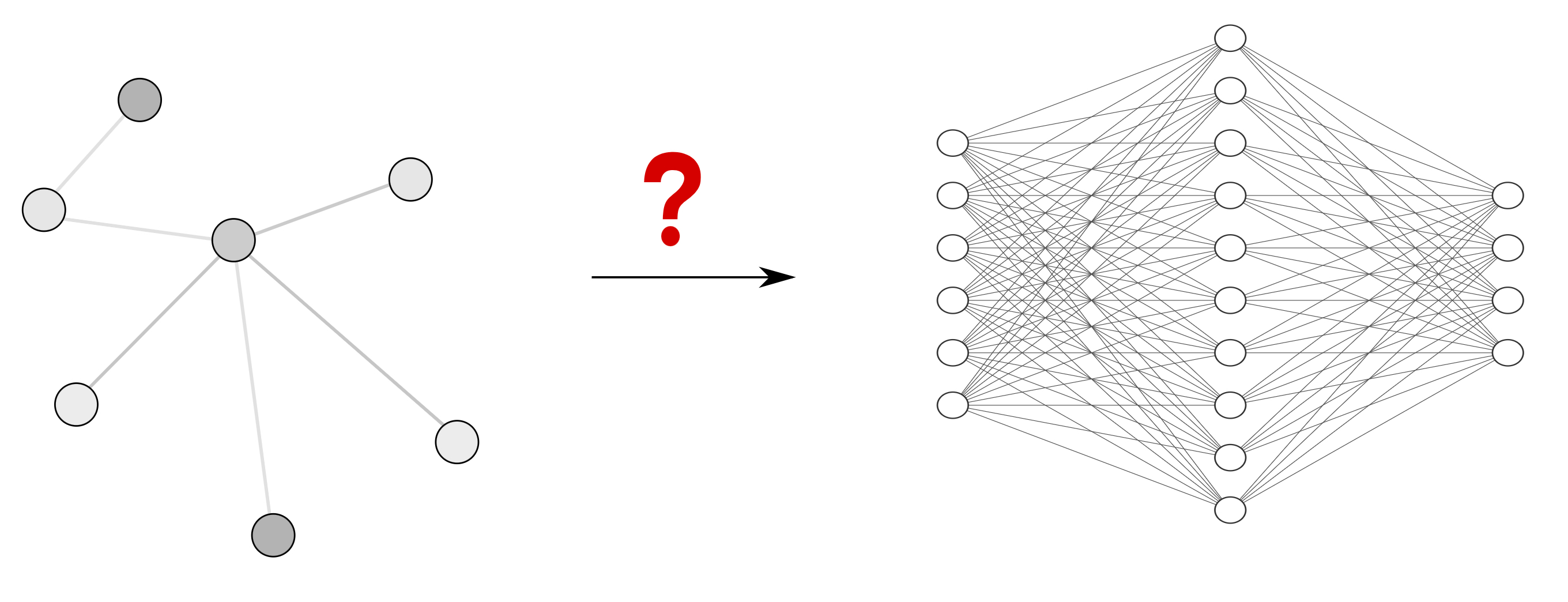

Knowledge distillation - Wikipedia

Model Distillation Techniques for Deep Learning

Knowledge distillation - Wikipedia. In machine learning, knowledge distillation or model distillation is the process of transferring knowledge from a large model to a smaller one.

Distilling the Knowledge in a Neural Network

Distilling the Knowledge in a Neural Network. A very simple way to improve the performance of almost any machine learning algorithm is to train many different models on the same data and …
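
Hinton et al. propose compressing the knowledge of such a collection of models into a single model. A minimal sketch of a first step is shown below, assuming the ensemble is combined by averaging the members' temperature-softened class probabilities; the averaged distribution could then serve as the soft targets for one student. The member logits and the temperature are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax with max-subtraction for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_soft_targets(member_logits, temperature=1.0):
    """Average the temperature-softened class probabilities of several models."""
    probs = [softmax(z, temperature) for z in member_logits]
    n_classes = len(probs[0])
    return [sum(p[c] for p in probs) / len(probs) for c in range(n_classes)]

# Hypothetical logits from three ensemble members for the same input.
members = [
    [3.0, 1.0, 0.0],
    [2.5, 1.5, 0.2],
    [1.0, 2.0, 0.1],
]
targets = ensemble_soft_targets(members, temperature=2.0)
print([round(t, 3) for t in targets])
```

Averaging probabilities keeps the result a valid distribution, and classes the members disagree about retain noticeable probability mass, which a single student trained on these targets can pick up.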

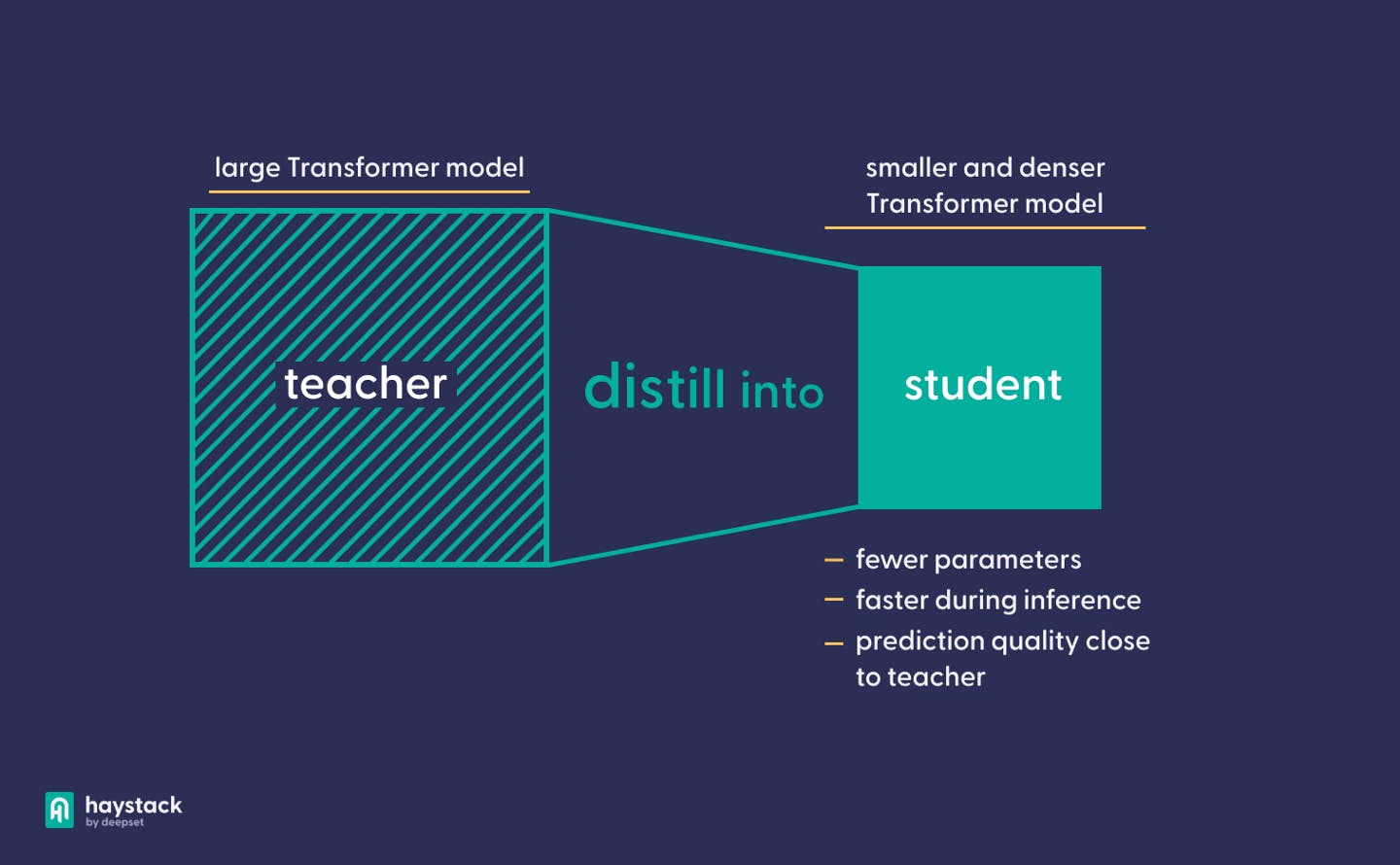

What is Quantization and Distillation of Models ? | by Sweety Tripathi

Knowledge Distillation with Haystack | deepset

What is Quantization and Distillation of Models ? | by Sweety Tripathi. Model distillation, also known as knowledge distillation, is a technique where a smaller model, often referred to as a student model, is trained …

Dataset distillation can be formulated as a two-stage optimization process: an “inner loop” that trains a model on learned data, and an “outer loop” …
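
The two-stage formulation can be illustrated with a deliberately tiny problem. In this sketch every choice is hypothetical: the model is 1-D linear regression y = w·x, the "learned data" is a single synthetic point whose input `xs` is fixed and whose target `ys` is optimized, the inner loop is one gradient step from a fixed initial weight, and the outer loop updates `ys` by differentiating the real-data loss through that step with the chain rule.

```python
def inner_step(w0, xs, ys, eta):
    """Inner loop (one step): train y = w*x on the single synthetic point (xs, ys)."""
    grad = 2.0 * xs * (w0 * xs - ys)
    return w0 - eta * grad

def outer_loss(w, real_data):
    """Outer objective: how well the inner-trained model fits the real data."""
    return sum((w * x - y) ** 2 for x, y in real_data) / len(real_data)

def distill_label(real_data, xs=1.0, ys=0.0, w0=0.0, eta=0.1, outer_lr=2.0, steps=300):
    """Outer loop: optimize the synthetic target ys by differentiating through the inner step."""
    for _ in range(steps):
        w1 = inner_step(w0, xs, ys, eta)
        # Chain rule: dL/dys = dL/dw1 * dw1/dys, where dw1/dys = 2 * eta * xs.
        dL_dw1 = sum(2.0 * x * (w1 * x - y) for x, y in real_data) / len(real_data)
        ys -= outer_lr * dL_dw1 * (2.0 * eta * xs)
    return ys

real_data = [(x, 3.0 * x) for x in (-1.0, -0.5, 0.5, 1.0)]  # hypothetical "natural" data
ys = distill_label(real_data)
w1 = inner_step(0.0, 1.0, ys, 0.1)
print(ys, w1, outer_loss(w1, real_data))
```

With these settings the inner step gives w₁ = 0.2·ys, so the outer loop drives ys toward 15, the value at which one training step on the single synthetic point yields the weight 3.0 that fits the real data.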